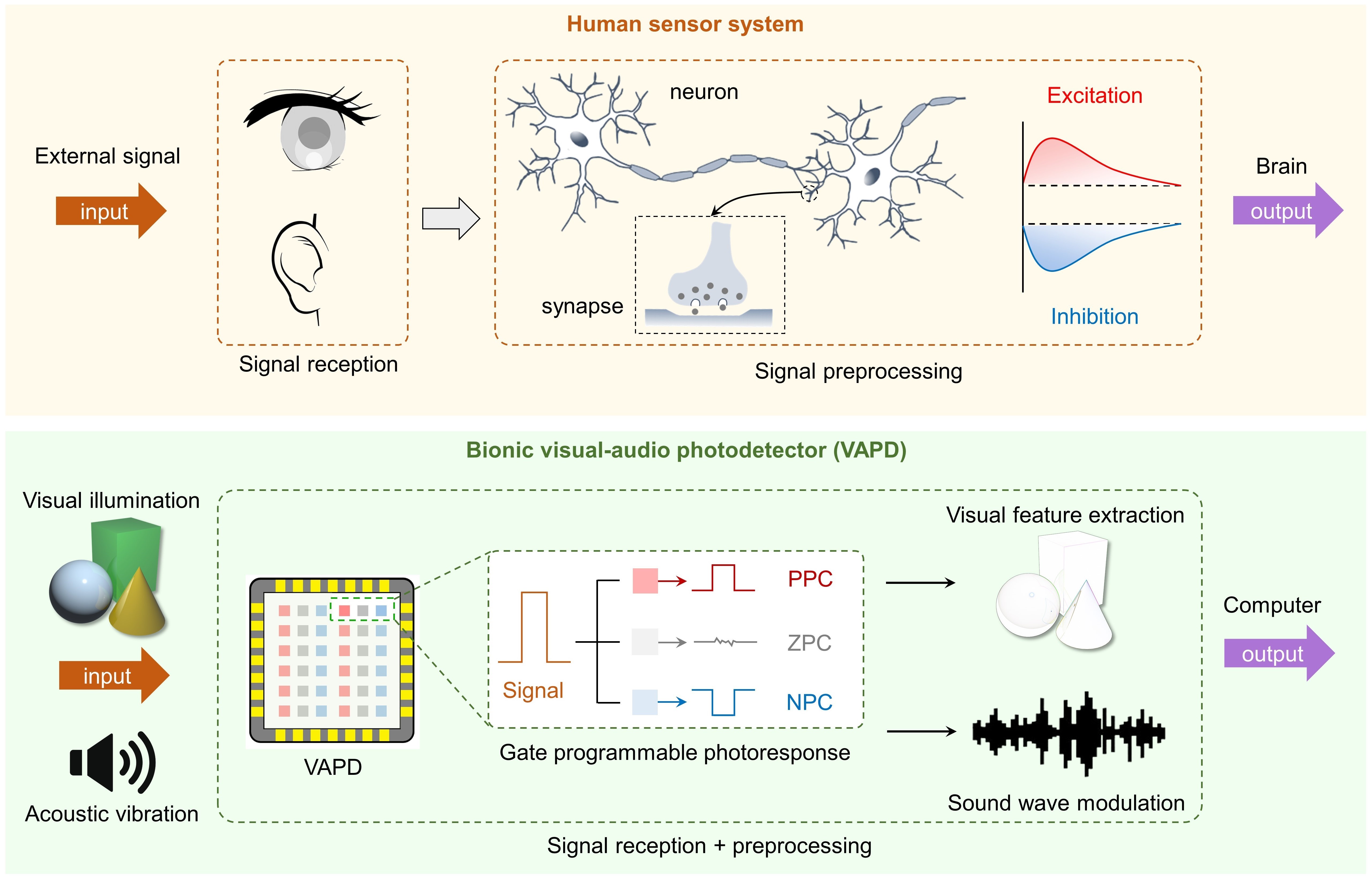

Figure 1: Schematic of the working principle of the bionic audio-visual photodetector, which imitates the human perception system. PPC, positive photocurrent; ZPC, zero photocurrent; NPC, negative photocurrent.

Eyes and ears, the organs of vision and hearing, play indispensable roles in how humans perceive external information. Traditional sensing technologies rely on separate optical and acoustic detection devices. The data these detection modules collect often contain redundant background interference, which places a heavy burden on data conversion, transmission, and storage and severely limits the operating efficiency of perception systems.

Inspired by human sensory organs, neuromorphic sensors have become key to making hardware systems more intelligent and integrated. In the human visual and auditory organs, cells and neuronal circuits not only detect external stimuli but also preprocess the detected signals, which helps remove background interference and speeds up computation in the brain. However, because visual and auditory signals are detected by fundamentally different mechanisms, achieving bimodal detection in a single device remains difficult. Novel neuromorphic sensors that can both detect and preprocess “audio-visual” information are therefore urgently needed.

Members of the Micro-Nano Manufacturing and System Integration Research Center have proposed a bionic “audio-visual” photodetector based on a graphene-germanium heterojunction field-effect transistor. Using optical methods, the device integrates visual and auditory sensing in a single unit, emulating how sensory cells intelligently perceive audio-visual signals. Programming the gate voltage makes the photocurrent continuously tunable from positive, through zero, to negative, which dynamically emulates the “excitation” and “inhibition” behaviors of neuronal pathways.

As a result, the device not only preprocesses visual images through operations such as inversion, sharpening, and edge extraction, but also classifies target character images. It can also modulate the intensity of the collected sound-wave signals, and, based on the “shapes” of the recorded sound waves, it can restore piano tones and audio of Chinese and English conversations. By combining “audio-visual” signal detection and preprocessing in one graphene-based device, this work simplifies the hardware structure and offers a new approach to developing miniaturized, highly integrated, multifunctional, and intelligent sensing systems.
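The release does not describe how the image operations are wired up, but the general idea of using gate-tunable positive, zero, and negative photocurrents as signed weights can be illustrated with an ordinary 2D convolution. The sketch below is a software analogy under stated assumptions, not the authors' method: each kernel weight stands for a photocurrent whose sign and magnitude would be set by the gate voltage (positive for PPC, zero for ZPC, negative for NPC), the 3×3 kernels are textbook image-processing kernels chosen for illustration rather than values from the paper, and `KERNELS` and `photocurrent_conv` are hypothetical names.

```python
from typing import Dict, List

Matrix = List[List[float]]

# Hypothetical 3x3 kernels (textbook examples, NOT taken from the paper).
# Each weight stands in for a signed photocurrent response:
#   positive -> PPC ("excitation"), negative -> NPC ("inhibition"), zero -> ZPC.
KERNELS: Dict[str, Matrix] = {
    "inversion": [[0, 0, 0], [0, -1, 0], [0, 0, 0]],
    "sharpening": [[0, -1, 0], [-1, 5, -1], [0, -1, 0]],
    "edge": [[-1, -1, -1], [-1, 8, -1], [-1, -1, -1]],
}


def photocurrent_conv(image: Matrix, kernel: Matrix) -> Matrix:
    """Valid-mode 2D correlation (no padding, output shrinks by kernel size - 1).

    Each output value is the sum of (pixel intensity x signed weight) over one
    window, loosely mimicking the summed photocurrents of a small device array
    whose responsivities have been programmed by gate voltage (an assumption).
    """
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(image), len(image[0])
    out: Matrix = []
    for i in range(h - kh + 1):
        row: List[float] = []
        for j in range(w - kw + 1):
            total = 0.0
            for a in range(kh):
                for b in range(kw):
                    total += image[i + a][j + b] * kernel[a][b]
            row.append(total)
        out.append(row)
    return out


if __name__ == "__main__":
    # 4x4 image with a vertical step edge from dark (0) to bright (1).
    step = [[0, 0, 1, 1] for _ in range(4)]
    print(photocurrent_conv(step, KERNELS["edge"]))
```

For the 4×4 step image above, the edge kernel gives `[[-3.0, 3.0], [-3.0, 3.0]]`, while a uniform image gives all zeros: the negative (NPC-like) weights cancel the flat background, so only the boundary produces a signal. The sharpening kernel's weights sum to 1, so it leaves flat regions unchanged and amplifies only local differences.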

The first author is Fu Jintao, a doctoral student from the Chongqing Academy, and Professor Wei Xingzhan is the corresponding author.

Link to relevant paper:

https://www.science.org/doi/10.1126/sciadv.adk8199